The term wearable technology refers to clothing and accessories that incorporate computer and advanced electronic technologies. The designs often have practical functions and features, but may also have a purely critical or aesthetic agenda.

Other terms in use are wearable devices, wearable computers and fashion electronics. A healthy debate is emerging over whether wearables are best worn on the wrist, on the face or in some other form.

Smart watches

A smart watch is a computerized wristwatch whose functionality goes beyond timekeeping. The first digital watch was launched as early as 1972, but production of true smart watches started only recently. The most notable smart watches currently available or announced are listed below:

Android Wear

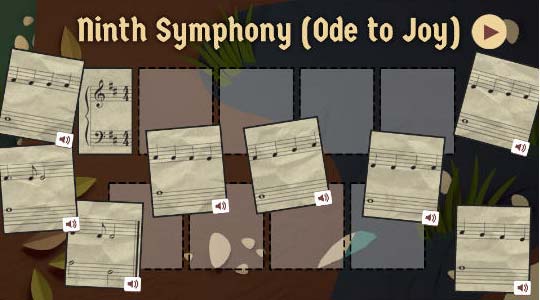

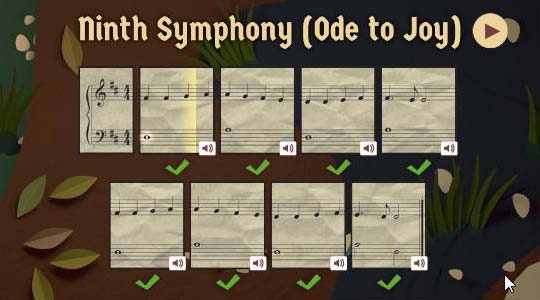

On March 18, 2014, Google officially announced Android’s entrance into wearables with the Android Wear project. Watches powered by Android Wear offer:

- Useful information when you need it most

- Straight answers to spoken questions

- The ability to better monitor your health and fitness

- Your key to a multiscreen world

An Android Wear Developer Preview is already available. It lets you create wearable experiences for your existing Android apps and see how they will appear on square and round Android wearables. In late 2014, the Android Wear SDK will be launched, enabling even more customized experiences.

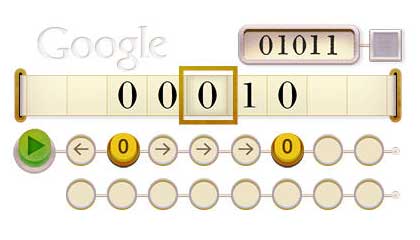

Google Glass

Google Glass is a wearable computer with an optical head-mounted display (OHMD). Wearers communicate with the Internet via natural-language voice commands. In the summer of 2011, Google engineered a prototype of Glass. Google Glass became officially available to the general public on May 15, 2014, at a price of $1500 (an open beta reserved for US residents). Google also provides four prescription frames for about $225. Apps for Google Glass are called Glassware.

Tools, patterns and documentation for developing Glassware are available at Google's Glass developer website. An Augmented Reality SDK for Google Glass is available from Wikitude.

Smart Shirts

Smart shirts, also known as electronic textiles (E-textiles), are clothing made from smart fabric that allows remote physiological monitoring of the wearer's vital signs, such as heart rate and temperature. E-textiles are distinct from wearable computing because the emphasis is placed on the seamless integration of textiles with electronic elements like microcontrollers, sensors and actuators. Furthermore, E-textiles need not be wearable. They are also found in interior design, in eHealth and in baby breathing monitors.

At the Recode Event 2014, Intel announced its own smart shirt, which uses embedded smart fibers to report the wearer's heart rate and other health data.