Last update: August 16th, 2011

A Web cache sits between one or more Web servers (also known as origin servers) and a client or many clients, and watches requests come by, saving copies of the responses — like HTML pages, images and files (collectively known as representations) — for itself. Then, if there is another request for the same URL, it can use the response that it has, instead of asking the origin server for it again.

Web caches are used to reduce latency and network traffic. Mark Nottingham has published a very useful tutorial about Web caches under a Creative Commons licence.

Kyle Robinson Young has posted a well-received tutorial about WordPress caching in Web Development Tutorials.

A cached representation is considered fresh if it has an expiry time or other age-controlling header set and is still within the fresh period, or if the cache has seen the representation recently, and it was modified relatively long ago. Fresh representations are served directly from the cache, without checking with the origin server.
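The freshness rule described above can be sketched in a few lines of Python. This is only an illustration, not how any particular cache is implemented: the function name `is_fresh`, its parameters, and the 10% heuristic fraction are assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumption for this sketch: when no explicit lifetime is given, treat the
# representation as fresh for 10% of the time since it was last modified.
HEURISTIC_FRACTION = 0.1


def is_fresh(
    now: datetime,
    stored_at: datetime,
    explicit_lifetime: Optional[timedelta],
    last_modified: Optional[datetime],
) -> bool:
    """Decide whether a cached representation may be served without revalidation."""
    age = now - stored_at
    if explicit_lifetime is not None:
        # An expiry time or other age-controlling header was set.
        return age < explicit_lifetime
    if last_modified is not None:
        # Seen recently and modified relatively long ago.
        heuristic_lifetime = (stored_at - last_modified) * HEURISTIC_FRACTION
        return age < heuristic_lifetime
    # No freshness information at all: check with the origin server.
    return False


stored = datetime(2011, 8, 16, 12, 0, tzinfo=timezone.utc)
print(is_fresh(stored + timedelta(minutes=30), stored, timedelta(hours=1), None))
```

A representation that is not fresh is not necessarily discarded; the cache simply has to check with the origin server before reusing it.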

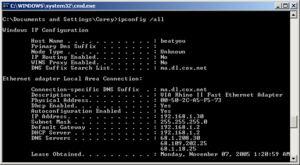

HTTP headers are sent by the server before the HTML, and are only seen by the browser and by any intermediate caches. Typical HTTP 1.1 response headers might look like this:

    HTTP/1.1 200 OK
    Date: Fri, 30 Oct 1998 13:19:41 GMT
    Server: Apache/1.3.3 (Unix)
    Cache-Control: max-age=3600, must-revalidate
    Expires: Fri, 30 Oct 1998 14:19:41 GMT
    Last-Modified: Mon, 29 Jun 1998 02:28:12 GMT
    ETag: "3e86-410-3596fbbc"
    Content-Length: 1040
    Content-Type: text/html

The Expires HTTP header is a basic means of controlling caches; it tells all caches how long the associated representation is fresh for. After that time, caches will always check back with the origin server to see if the document has changed. Expires headers are supported by practically every cache. One problem with Expires is that it’s easy to forget that you’ve set some content to expire at a particular time. If you don’t update an Expires time before it passes, each and every request will go back to your Web server, increasing load and latency.
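One way to avoid a stale, hard-coded Expires time is to compute the value from the current time whenever the response is generated. The following is a minimal sketch using Python's standard library; the helper names `expires_header` and `has_expired` are hypothetical, and the timestamp is taken from the sample headers shown earlier.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime, parsedate_to_datetime


def expires_header(now: datetime, lifetime: timedelta) -> str:
    """Return an HTTP date `lifetime` after `now` (`now` must be UTC-aware)."""
    return format_datetime(now + lifetime, usegmt=True)


def has_expired(expires_value: str, now: datetime) -> bool:
    """True once the absolute time in an Expires header has passed."""
    return now >= parsedate_to_datetime(expires_value)


# Time of the sample response shown earlier.
now = datetime(1998, 10, 30, 13, 19, 41, tzinfo=timezone.utc)
value = expires_header(now, timedelta(hours=1))
print("Expires:", value)  # Expires: Fri, 30 Oct 1998 14:19:41 GMT
print(has_expired(value, now + timedelta(hours=2)))  # True
```

Because the value is an absolute date, it is only as accurate as the clocks of the server and the cache.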

HTTP 1.1 introduced a new class of headers, Cache-Control response headers, to give Web publishers more control over their content, and to address the limitations of Expires. The most important response directive is max-age=[seconds], which specifies the maximum amount of time that a representation will be considered fresh. Unlike Expires, this directive is relative to the time of the request rather than absolute: [seconds] is the number of seconds, counted from the time of the request, for which you wish the representation to be fresh.
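To illustrate how a client or cache might read this directive, here is a small Python sketch that extracts max-age from a Cache-Control value. The function names are hypothetical, and the comma-splitting parser is a simplification that assumes no directive contains a quoted comma.

```python
from typing import Dict, Optional


def parse_cache_control(value: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control value into {directive: argument or None}."""
    directives: Dict[str, Optional[str]] = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, arg = part.partition("=")
        # Directives without "=" (e.g. must-revalidate) carry no argument.
        directives[name.strip().lower()] = arg.strip().strip('"') if sep else None
    return directives


def max_age_seconds(value: str) -> Optional[int]:
    """Return max-age in seconds, or None if it is absent or malformed."""
    raw = parse_cache_control(value).get("max-age")
    return int(raw) if raw is not None and raw.isdigit() else None


print(max_age_seconds("max-age=3600, must-revalidate"))  # 3600
```

When a response carries both max-age and Expires, as in the sample headers shown earlier, HTTP 1.1 caches give max-age precedence.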

A tool (REDbot) to check Cache-Control and other HTTP headers has been made available by Mark Nottingham; a public instance is available at redbot.org.